06.03.2025 | Blog Which LLM is the right one? A guide for companies

1. Size isn't everything: smaller models as an alternative

Larger models such as GPT-5.2, Gemini 3.0 or Claude 4.5 offer impressive capabilities, but they are not always essential. Smaller models are often sufficient, especially for chatbot applications in combination with search software. In this case, the model receives the knowledge it needs to answer questions from the search software. So big is not necessarily better - smaller models can deliver comparable results of high quality depending on the use case but are faster and more cost-efficient.

However, if you want to use the external knowledge of an LLM, you need large models with which more complex use cases, such as code generation for developers, are possible.

2. Language support: Not every model understands German

There are impressive vision language models that can process text from image files without OCR (=Optical Character Recognition). These models, as well as LLMs, are often trained in languages such as English or Chinese. If you need a model for German-language content, you should therefore check whether the selected LLM can handle German texts effectively: Does the vision model recognize umlauts (ÄÖÜ), for example, or can the text model process German grammar, sentence structure, etc. well? Here too, it is important to evaluate the model according to the use case.

3. Context length: How much information can be processed?

Another criterion when choosing a model is the context length. Some models are able to process large amounts of information at once (e.g. millions of tokens). This also depends on the use case. For example, a large context length is particularly useful when summarizing long documents. For other scenarios, however, a smaller model is sufficient. For classic search queries, for example, the relevant content is extracted in advance and passed on to the model for answering so that it does not have to process entire documents. This means that a model with a large context length is not always the most efficient choice.

4. Open source vs. proprietary models: Which solution fits?

Open-source models such as gpt-oss, Mistral Magistral/Ministral or Qwen 3 are adaptable, transparent and offer companies the opportunity to operate LLMs on their own hardware (on-premises) and thus minimize data protection risks. These are universal models with a balanced mix of language capability, speed and cost efficiency.

Proprietary models do not require their own hardware as they are operated in the cloud. Although the paid versions of the models do not use user data for training, some organizations still prefer on-prem solutions to maintain full control over their data.

5. Security and costs: cloud or on-prem?

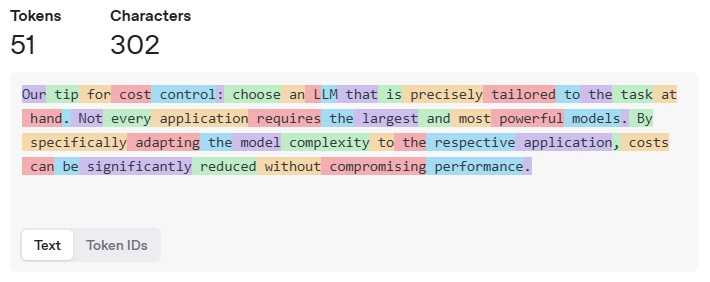

This leads to the question of whether to run the model yourself on your own hardware or opt for a cloud solution (Software as a Service = SaaS). Cloud models such as GPT-4o are very powerful, but require careful cost control, as billing is based on token consumption. Depending on usage, this can be expensive.

Purchasing your own LLM infrastructure can be worthwhile for organizations that want to maintain independence and data protection in the long term. Alternatively, models such as GPT-4o can be hosted securely via Microsoft Azure without having to purchase your own hardware. The token-based costs must also be considered here.

An example: This is how GPT-4o divides the text into tokens. (Source: https://platform.openai.com/tokenizer)

6. Digression - DeepSeek: Opportunity or risk?

DeepSeek is an open-source model from China that has recently caused a stir with its innovative architecture and efficient computing power. It shows that even smaller models can be powerful with less computing power. It is well-suited for solving complex tasks and, as a reasoning model, explicitly describes its “thought process” but consumes more tokens as a result. There is data protection concerns with the freely available version, as user data can be used for training. In a self-hosted operation, control over the data would be guaranteed. Reasoning models are “deductive” AI models and are designed to imitate logical thought processes. They reflect on tasks, analyze problems step by step and provide logically justified answers.

Conclusion: Get professional advice

As a provider of enterprise search software with an AI assistant, we follow the development of new models with excitement. We test these models, objectively evaluate their strengths and weaknesses and know what is best suited for which use case and for which IT infrastructure. We help you to find the right model for your use case - whether standard model or “bring your own model”.

Background info: Tokens, context windows and costs

Cloud-based LLMs charge per token. Providers such as OpenAI (GPT-4o), Google (Gemini), and Microsoft (Azure OpenAI Service) calculate usage based on the number of tokens processed—both in the input (prompt) and the generated output.

For locally operated open-source models, there is no direct token-based billing. However, costs arise from hardware (e.g., GPUs or server capacity), power consumption, and maintenance. In the long run, this can be more cost-effective, particularly when usage is high, or data protection is a priority.

Related articles

iAssistant - Fast & precise answers

The author

Daniel Manzke