18.12.2015 | Blog New High Quality Search and Linguistics for iFinder5 elastic

In part 1 of this blog article I talk about advantages for the standard user and how we are able to reduce configuration efforts. In part 2 I am going to talk about new features for experts such as our powerful new Near-Queries, Search Modes and future plans based on our concept of the Typed Index [1]

Tokenization: Why it matters and why our users don’t have to care

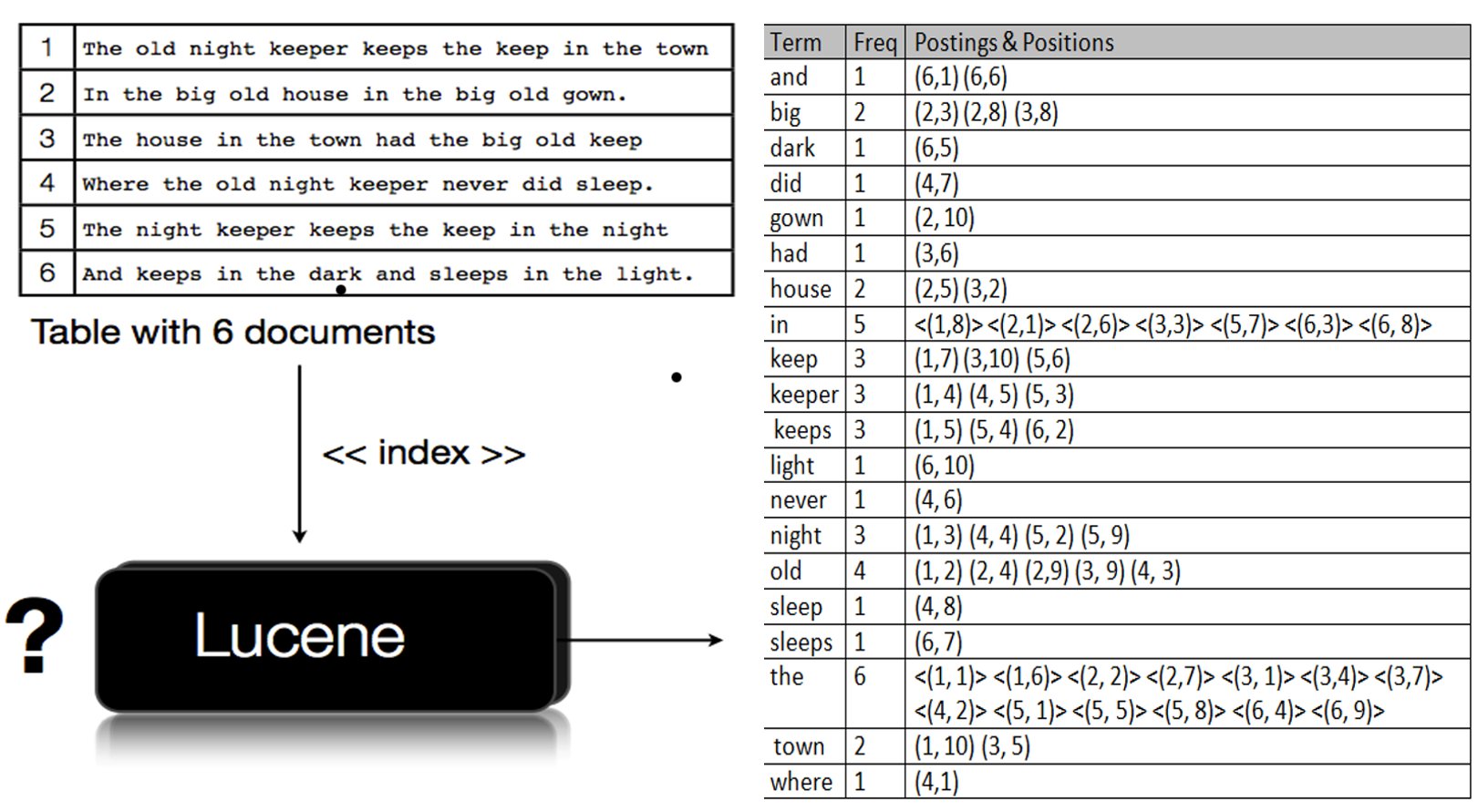

Search functionality provided by a search engine is different from text search people know e.g. from their text editors or office software. In your office software you can search for any substring of your text. The more text you have, the longer your search will take. The computational complexity for this kind of search is linear in the overall text size. Therefore, it is intractable for big document sets. Search engines like iFinder use a data structure called inverted file index (see Fig 1) to implement search efficiently for huge document sets.

Fig 1: Inverted File Index

The inverted file index consists of a term dictionary, which is an alphabetically sorted list of all words occurring within the document set, and posting lists for each word. A posting list is a list of all documents (their numbers), in which the word occurs together with the position of the word within the document. The good thing about the inverted file index is, that it allows to search for words from the term dictionary and combinations of them with high efficiency, even for huge document sets. The bad thing is, that it allows to search only for words that are in the term dictionary [1].

Every document / text is a stream of characters. The process that breaks it into words is called tokenization which is often followed by one or several normalization steps. The following example shows how tokenization influences and limits the kind of words for which we can search.

Text: “Send questions for OpenOffice to support@fufu.org”

Words generated by a standard tokenization & lowercase normalization: send, questions, for, openoffice, to, support@fufu.org

→ No search results for “support” since it’s not a word in the term dictionary

Words generated by a splitting tokenizer and lowercase normalization: send, questions, for, open, office, to, support fufu org

→ No search results for “openoffice” (all lowercase) since there is no simple algorithmic way to know that it should be splitted [2]

Though a search engine can never provide efficient search for arbitrary substrings we come very close to that. We decided to use a tokenizer that allows very complex tokens such as email addresses, but we additionally split complex tokens into their parts and also add these parts as words to our term dictionary.

We not only split at special signs such as “@”, “-”, etc.., we also split CamelCase tokens such as OpenOffice and we split tokens when letters and numbers are adjacent. In this way users do not have to distinguish between “iphone6” and “iphone 6” when they search for information on their latest new iphone. Whatever reasonable assumption about words our users make, they find them with our search technology.

[1] With Fuzzy-, Wildcard-, or Regex-Queries you can search for words that are not in the term dictionary. However, this kind of search is often very inefficient since it requires scanning big chunks of the term dictionary and the whole index. Furthermore, it requires some expert knowledge about the syntax of these query types.

[2] "OpenOffice" could only be splitted based on a lexicon ressource that contains "open" and "office" as valid entries.

Word Normalization: Why we need more than one normal form for a word

In the last section about tokenization we also mentioned word normalization. We used a lowercase normalization in the example. Usually lowercase normalization is a good idea. However, sometimes case matters. There is a big company named “MAN” and we want to be able to distinguish a search for “MAN” from a search for “man”. Therefore, we also retain exact words besides normalized forms in our index.

In many languages we have diacritics or umlaut signs e.g. in “Amélie” or “Müller”. Very often, people do not type these special characters, especially when they type queries. Therefore, it is a reasonable normalization step to remove them. On the other hand, there might be a guy named “Muller” in our company and another guy named “Müller”. So we should still be able to distinguish both names in our search. Furthermore, there is more than one diacritics normal form. In Germany people tend to normalize “Müller” to “Mueller” while in other countries people will use “Muller”. We therefore have more than one diacritics normal form in our index.

A very import kind of word normalization concerns inflections. In many languages words occur in different forms in order to express grammatical roles such as tense, person, number, case, etc. If you search for “mouse” you also want to find “mice”.

Usually simple algorithmic stemmers are used to strip off typical suffixes of words in order to achieve this kind of normalization. However, these approaches tend to over- and understem.

Examples for stemming: going → godecoder, decoding, decodes → decod

Examples for overstemming: Messer → mess king → k

Examples for understemming:spoke → speak

IntraFind has developed high quality morphological lexicons for most European languages which deliver much more accurate normalization than algorithmic stemmers. For a comparison of simple stemmers and morphological normalization see [3]. Some examples of lemmatization are shown in the following table.

English:

going → go (Verb)

bought → buy (Verb)

bags → bag (Noun)

bacteria → bacterium (Noun)

German:

lief → laufen (Verb)

rannte → rennen (Verb)

Bücher → Buch (Noun)

Taschen → Tasche (Noun)

Even this kind of high quality normalization is not always desirable. Our search engine also allows wildcard, fuzzy and regex searches. If the user searches for “mou*” (any term that starts with “mou”) he does not expect to find a document containing the word “mice”. This means that besides morphologically normalized words we also need the original forms at least for wildcard, fuzzy or regex queries.

Phonetic normalization can also be very useful, especially for names. There are often different spelling variations for foreign names and a phonetic normal form allows to abstract from these more precisely than a simple fuzzy search.

Example for phonetic Normalization: Muhamed → MHMD Mohammed → MHMD

We integrated phonetic normalizations such as Soundex, Metaphone, Double Metaphone, Cologne Phonetic and many more. They can easily be activated and since they are tuned for special languages we can activate them for each language individually. Since phonetic normalization makes most sense for proper names, we can optionally exclude normal words (identified by our morphological lexicons) from phonetic normalization making phonetic normal forms much more useful.

Each of these normal forms has its justification and is used for its own purposes. None of them is revolutionary new. Many of them are available as separate Lucene analyzers. We have integrated all these normal forms into one Lucene / Solr / Elasticsearch analyzer and all normal forms share one Lucene field. By using prefixes we are still able to distinguish different normal forms from each other. We call this approach the Typed Index (see e.g. [1], [2]). This approach has two key advantages.

First, it reduces configuration efforts considerably. We no longer need to specify different index fields and analyzers in complex mappings or index schemas and we don’t have to configure a complex query analysis. We only need one content field and one analyzer. Furthermore, we provide a query parser that allows to specify search modes for expert users, if they explicitly want to do exact search, search on lowercase / diacritics normal forms or on morphological normalized terms, and that implements a default search mode using all normal forms with configurable boosts for each of them. In this way, search for the non-expert user can be configured so that all possible matches are provided, but exact matches (MAN vs. man) are ranked much higher.

Second, since all normal forms are in one field, we can use word proximity information and can combine different normal forms in near or phrase queries. We can e.g. search for the words “book” and “Mohamed” close to each other with NEAR/2(book AND Mohamed) and this query will match a document containing “books of Muhammed”. For this match to work neither morphological normalization nor phonetic normalization alone would suffice, since “book” and “books” are phonetically different and for “Mohamed” to match “Muhammed” we need phonetic normalization. Note that this kind of Near Query that combines different normal forms is only possible with our Typed Index approach.

Word decomposition and search

When introducing tokenization, I already talked about additionally splitting complex words into their parts. Besides the plain algorithmic splitting methods described above, we have a very sophisticated linguistic compound decomposition based on lexicons and complex heuristics. Compound splitting is very important for German, which uses complex compound nouns where other languages tend to use phrases.

Some examples of German compounds:

Kinderbuch means children’s book

Bundesfamilienministerium means Federal Ministry for Family Issues

With our compound decomposition we can split these words into their lemmata (semantic parts) and we can even distinguish between head and modifier parts. The head word of “children’s book” is “book”, because it’s a special kind of book, modified by the modifier “child”. Whenever we search for “Buch” we also get matches for “Kinderbuch”.

Depending on the boosting-configuration we can boost compound matches equally high, higher or lower than matches for individual words and we can boost matches with head words higher than matches with modifiers. This means that “Buchladen” (book store) could score lower than “Kinderbuch” when we search for “Buch”. A search for “Bundesfamilienminsterium” will also match “Ministerium des Bundes für Familie”, a variant of expressing the same concept in a way more similar to other languages and equally frequent in German.

By the way, we recognize paragraph, sentence, and general separator boundaries in documents and usually forbid such boundaries within compound matches, complex token matches, and phrase / near searches. “Kinderbuch” will only match with occurrences of “Kind” and “Buch” if these two parts are close to each other and e.g. not in different sentences.

Multiliguality and Mixed Language Documents

Morphological normalization, word decomposition, and even phonetic normalization are usually language specific. The traditional approach to do search in a multilingual environment works by determining a language for each document and selecting a language-specific analyzer based on the result. User Queries are usually expanded into all configured languages.

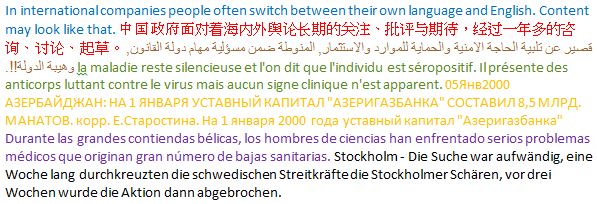

This approach has two disadvantages. It requires complex configuration (mappings, index schemata) and it cannot handle mixed language documents (emails in which users switch between languages, documents with an English abstract and French content, etc.). In my last blog article I talked about language identification and language chunking [4]. Our language chunker splits arbitrary text into chunks of the same language. See Fig 2 for an example.

Fig 2: Language Chunking: Different Chunks are marked with different colours.

We have integrated the language chunker into our analyzer. Document content is first splitted into chunks of the same language and for each chunk a language-specific analysis is started. All terms / words end up in the same field, language-specific normal forms are again distinguished by their prefix (typed index). This way, no additional configuration is needed and we can handle mixed-language documents in a natural way.

[1] “The Typed Index”, C. Goller, FOSDEM 2015, https://archive.fosdem.org/2015/schedule/event/the_typed_index/

[2] “The Typed Index”, C. Goller, Lucene Revolution 2013, Dublin, https://www.youtube.com/watch?v=X93DaRfi790

[3] “The difference between stemming and lemmatization”, U. Seisenberger, IntraFind Blog, http://www.intrafind.de/blog_en/the-difference-between-stemming-and-lemmatization-380

[4] Language Identification and Language Chunking, C. Goller, IntraFind Blog

The author

Dr. Christoph Goller